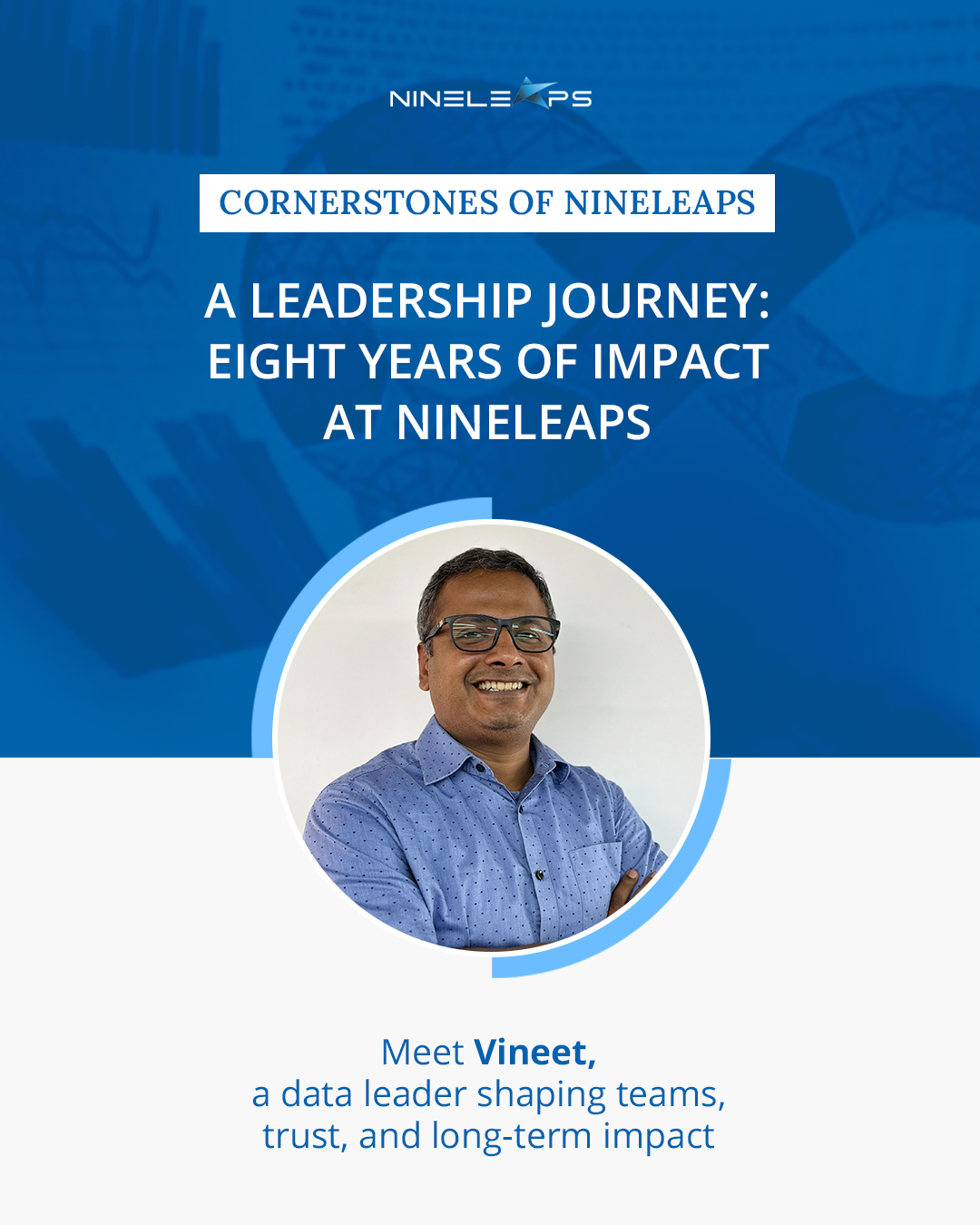

A Leadership Journey: Eight Years of Impact at Nineleaps

Careers are often measured in titles and timelines. Real impact, however, shows up in the moments in between. It appears in the teams you build, the people you grow, and the values that hold when things get hard.

In this quarter’s IMPACT story, we sit down with Vineet Punnoose, Senior Vice President, Data Engineering, at Nineleaps. He reflects on eight years of growth and leadership, and on the lessons he learned while building teams, client ecosystems, and a culture rooted in trust.

Q: Can you introduce yourself and tell us your role at the company?

Hi, I’m Vineet Punnoose, and I currently serve as Senior Vice President, Data Engineering, at Nineleaps. In this role, I focus on growing strong engineering teams, shaping client ecosystems, and ensuring that what we build creates meaningful impact for both our clients and our people.

Q: How long have you been with the company, and what initially attracted you to this position?

I’ve been with Nineleaps for eight years. I joined as Senior Manager of Data Engineering and Operations, drawn by the company’s energy and its belief in pairing innovation with people development. From the start, it felt like more than a role. It felt like a chance to build something meaningful with people who genuinely cared about doing things right.

Q: What is your day-to-day like in your current role?

No two days look the same. My time is split between assessing team health, participating in sales pitches, and exploring new technology stacks. I move between strategic conversations and hands-on problem-solving with teams. That mix of people, technology, and ideas is what keeps the work exciting.

Q: How have you grown professionally since joining the company?

I’ve grown from Senior Manager to Senior Vice President, but the real growth goes far beyond titles. Over time, I’ve learned to think bigger, trust teams more deeply, and take on responsibilities that pushed me outside my comfort zone. Each step came with challenges that shaped how I lead and how I approach decision-making.

Q: What skills have you developed or strengthened during your time here?

The growth has been multidimensional:

- Technical skills: Staying hands-on with engineering to remain relevant.
- Product thinking: Understanding the full product lifecycle and its business impact.
- Team building: Creating environments where people can grow, not just perform.
- Leadership and soft skills: Communication, empathy, negotiation, and emotional intelligence, the skills that matter most when leading at scale.

Q: How would you describe the company culture?

It’s a culture where effort and ideas matter more than hierarchy. The open-door policy is real: anyone can speak to anyone. Contributions are valued over titles, and people are treated as whole individuals, not just roles on an org chart.

Q: Can you share an example of when the company’s values aligned with your personal values?

Nineleaps balances ambition with responsibility. From flexible work policies to investments in learning and clear growth paths, the company consistently demonstrates that sustainable growth and people-first thinking can coexist. That alignment has reinforced my belief in building organizations that care deeply while aiming high.

Q: What do you enjoy most about working here?

The people. I genuinely look forward to work because of the conversations, the collaboration, and the shared sense of purpose. When you’re surrounded by people who inspire and support you, work becomes meaningful rather than transactional.

Q: What has been the most rewarding project you’ve worked on?

Building the Uber team stands out. Watching it grow from 40 members to more than 150 has been incredibly fulfilling. Beyond the scale, what mattered most was seeing individuals step into leadership, tackle complex challenges, and grow alongside the organization.

Q: Can you share a challenge and how the company supported you?

My daughter suffered a severe accident and was hospitalized in the ICU.
During that time, I was struggling to balance critical work commitments with being there for my family. Without hesitation, my manager stepped in, took over my responsibilities, and asked me to focus entirely on my daughter’s recovery. That moment defined what our culture truly means: support when it matters most.

Q: What has it been like working closely with the leadership team?

It’s been empowering. Transparency and accessibility allow us to understand not just decisions, but the thinking behind them. That exposure has helped me become a more thoughtful and effective leader.

Q: What does it mean to head an entire client ecosystem?

It is a responsibility I take seriously. The role is about bridging client aspirations with delivery excellence: anticipating needs, building trust, and creating partnerships that extend beyond individual projects.

Q: How has your experience been working across teams and departments?

It has been highly collaborative. Silos don’t define how we work. Engineers, product managers, sales, and HR all bring different perspectives, and the best solutions emerge when those viewpoints come together.

Q: What advice would you give someone considering joining Nineleaps?

Come with a growth mindset. This is a place where you’re challenged and supported in equal measure. Speak up, take ownership, and invest in relationships. The people here are what make the journey meaningful.

Q: What makes Nineleaps stand out in the industry?

A genuine people-first culture, real meritocracy, a strong focus on innovation, deep client partnerships, and growth that never compromises wellbeing. That balance is rare, and it’s what makes the difference.

Looking back on eight years at Nineleaps, Vineet’s journey reflects more than professional advancement. It tells a story of trust, growth, and leadership rooted in empathy. From building large-scale teams to navigating deeply personal challenges, his experience underscores a simple truth: the strongest organizations are built when people are supported as humans first.

As Nineleaps continues to grow, stories like these remind us that impact is measured not just in outcomes, but in how we show up for one another along the way.
